SUMMARY

This document presents specific software testing criteria, important concepts and supporting tools. Functional, structural – based on control flow and data flow – and flexibility-based evaluation criteria are discussed. Our focus is on mutation testing. Despite its effectiveness in finding bugs, even in small programs, mutation testing has cost problems related to the large number of mutations produced and the determination of these mutations. In order to reduce the cost of using transformation testing, many theoretical and empirical investigations have been carried out. A summary of the most important historical studies relating to the assessment of the reform is presented. These studies aim to establish a testing strategy that allows the use of change testing when testing commercial software products. Experimental work and related problems are demonstrated using PokeTool, Proteum, and PROTEUM=IM tools, which support structural processing, mutant analysis criteria, and interface modification criteria, respectively. Other community initiatives and efforts to automate these processes are also identified.

Keywords: software testing; interface; computing.

1. INTRODUCTION

Software engineering has evolved significantly in recent decades, trying to establish techniques, criteria, methods and tools for software production as a result of the increased use of computerized systems in practically all areas of human activity, which results in a growing demand for quality and productivity from the point of view both, the production process and the manufactured products. Software engineering can be defined as a subject area that applies engineering principles to produce high-quality software at low cost. Through a series of steps involving the development and application of methods, techniques and tools, software engineering provides the means to achieve these goals.

The software development process includes a series of activities in which, regardless of the techniques, methods and tools used, errors can still occur in the product. Activities grouped under the name “Software Quality Assurance” are introduced throughout the development process, including operations.

The testing function consists of the dynamic analysis of the product, being the right task to identify and eliminate the remaining errors. Systematic testing is a key activity to move up to Level 3 of the Software Engineering Institute – SEI CMM model from a process quality standpoint. Furthermore, the knowledge derived from testing activities is important for troubleshooting, maintenance, and software reliability measurement tasks. It is important to note that testing activities have been identified as the most expensive activities in software development. Despite this, it is evident that much less is known about software testing than other software development aspects and/or activities.

Testing software products actually consist of four steps: test planning, test case design, performance, and evaluation of test results. These activities should be developed during the software development process itself and, usually, they occur in three stages of testing: unit, integration and system. Unit testing focuses on a small unit of the software project, that is, it tries to identify understanding and implementation errors in each software module separately. Integration testing is a structured activity used in the compilation of a program structure to detect errors related to integration between modules. The goal is to create a program structure that has been determined by design, based on unit-tested modules. System testing, which is performed after system integration, aims at identifying failures in operations and performance that are not in compliance with the specifications.

2 THEORETICAL BACKGROUND

In general, software testing criteria are essentially determined based on three different techniques: functional, structural, and fault-based. In functional engineering, testing criteria and requirements are determined based on the software specification function; in structural engineering, criteria and requirements are essentially derived from the properties of a specific implementation to be tested; and in the error-based technique, testing criteria and requirements are derived from the knowledge of typical errors committed in the software development process. We can also observe the establishment of criteria for generating test sequences based on machines with finite states. The latter have been used in the context of validation and testing of reactive and object-oriented systems (BEIZER, 1995).

Tests can be classified in two ways: specification-based tests and program-based tests. According to this classification, technical-functional criteria are based on specifications, and both structural and fault-based criteria are considered implementation-based criteria (BEIZER, 1995).

In specification-based testing (or ‘black-box’ testing), the goal is to determine whether the program meets the functional and non-functional requirements specified. The problem is that the existing specifications are generally informal and, therefore, the determination of the total coverage of the specification achieved through a given set of test cases is also informal. However, specification-based testing criteria can be used in any context (procedural or object-oriented) and in any testing phase without the need for customization. Examples of these criteria are: equivalence classification, threshold analysis, cause and effect diagram and state-based testing (HETZEL, 1988).

In contrast to specification-based testing, program-based testing (or ‘white-box’ testing) requires the analysis of the source code and selection of test cases that will test parts of the code, rather than its specification.

It is important to emphasize that the testing techniques should be seen as complementary, and the question arises as to how they can be deployed in such a way as to best use the benefits of each one in a testing strategy that results in a high quality testing activity, or to be effective and cheap. Testing techniques and criteria provide the developer with a systematic and theoretically sound approach, as well as with a mechanism that can help to assess the quality and appropriateness of the testing activity. Testing criteria can be used to generate a set of test cases, as well as to assess the adequacy of these sets (HETZEL, 1988).

Given the diversity of established criteria and the complementary nature of recognized testing techniques and criteria, a crucial point arising from this perspective is the selection and/or determination of a testing strategy, which ultimately involves the selection of testing criteria, so that the benefits of each criteria are combined for a higher quality testing activity. Theoretical and empirical studies on testing criteria are of great importance for the formation of this knowledge and provide subsidies for the establishment of cost-effective and highly effective strategies. There are several efforts by the scientific community in this sense (MYERS, 1979).

It is essential to develop testing tools that may support the actual testing activities, as these activities are very prone to errors and, in addition, they are not productive when applied manually, as well as support empirical studies aimed at evaluating and supporting the comparison of various testing criteria. Thus, the availability of testing tools provides greater quality and productivity for testing activities. Scientific efforts in this sense can be observed in the literature (MYERS, 1979).

As seen, the criteria based on data flow analysis and the mutation analysis criterion have been intensely studied by several researchers in different aspects. The results of these studies evidence that these criteria, which are currently being investigated mainly in science, partly in cooperation with the industry, may represent the state of practice in software production environments in the medium term. Strong evidence in this regard is the involvement of Telcordia Technologies (USA) in the development of xSuds, an environment that supports the application of criteria based on data flow analysis (CRESPO et al, 2002).

Tests have several limitations. In general, the following problems cannot be solved: given two programs, whether they are equivalent; given two sequences of instructions (paths) of one single program or different programs, if they calculate the same function; and a given path, whether it is executable or not, that is, if there is a set of input data that leads to the execution of this path. Another key limitation is random correction – the program may randomly return a correct result for a given d 2D data item, that is, a specific data item runs, satisfies a testing requirement, and does not indicate the presence of an error ( CRESPO et al, 2002).

A program P with input range D is said to be correct with respect to a specification S if S(d) = P(d) for each d data element d belonging to D, that is, if the program behavior is correct according to the expected behavior for all input data. Given two P1 and P2 programs, if P1(d) = P2(d), for any d 2 D, P1 and P2 will be equivalent. Software testing assumes that there is an oracle – the tester or some other mechanism – that can determine, within time limits, for each data item d 2 D if S(d) = P(d) and reasonable effort, an oracle simply decides that the output values are correct. It is known that exhaustive tests are impractical, that is, testing all possible elements of the input domain is usually expensive and requires much more time than that available. However, it should be noted that there is not a universal testing procedure that can be used to prove the correctness of a program. While it is not possible to prove through testing that a program is correct, if done systematically and carefully, testing will help building confidence that the software will perform the specified functions, and some of these functions should be demonstrated with minimal characteristics from the point of view of quality (IEEE Computer Society, 1998).

Therefore, two questions are at the core of testing activities: how should testing data be selected? How can one decide whether a program P has been sufficiently tested? Criteria for selecting and evaluating testing sets are crucial to the success of testing activities. These criteria should indicate which test cases should be used to increase the likelihood of bug detection or, if bugs are not detected, to build a high level of confidence in the correctness of the program. A testing case consists of an ordered pair (d; S(d)) where d 2 D and S(d) is the expected output (IEEE Computer Society, 1998).

There is a strong agreement between selection procedures and suitability criteria for testing cases, since, given a suitability criterion C, there is an MC selection procedure that states: Choose T, so that T is suitable for C. Similarly, in an M selection procedure, there is a CM suitability criterion that says: T is suitable if it was selected after M. Therefore, the term “testing case suitability criterion” (or simply “test criterion”) is also used for assignment selection procedures. Given P, T and a criterion C, the set of test cases T is considered C suitable for testing P, if T satisfies the testing requirements specified by criterion C. Another important issue in this context is, given an adequate T C1 sentence, which C2 test criterion would help improving T? This issue has been analyzed in theoretical and empirical studies (CRAIG; JASKIEL, 2002).

In general, it can be said that the minimum properties that a test criterion C must meet are:

- To ensure coverage of all conditional branches from a flow control point of view;

- To require at least one use of each computational result from a data flow point of view; and

- They require a finite set of test cases (CRAIG; JASKIEL, 2002).

The advantages and disadvantages of software testing criteria can be evaluated through theoretical and empirical studies. From a theoretical point of view, these studies were mainly supported by an inclusion ratio and the examination of the complexity of the criteria. The inclusion ratio creates a partial order among the criteria, establishing a hierarchy among them. A criterion C1 must contain a criterion C2 if, for any program P and any set of test cases, T1 is eligible for C1, T1 is also eligible for C2 and there is a program P and a T2 set eligible for C2, except C1. Complexity is defined as the maximum number of test cases required by a criterion in the worst case. In the case of criteria based on data flow, these have an exponential complexity, which motivates the performance of empirical studies to determine the costs of applying these criteria from a practical point of view. More recently, some authors have addressed the issue of the effectiveness of testing criteria from a theoretical perspective and defined other relationships that capture the ability to detect flaws in test criteria (CRAIG; JASKIEL, 2002).

From the point of view of empirical studies, normally three aspects are analyzed: costs, effectiveness and strength (or difficulty of satisfaction). The cost factor reflects the effort required for the criterion to be applied; in general, it is measured by the number of test cases needed to meet the criterion (CRAIG; JASKIEL, 2002).

An activity frequently mentioned in the performance and analysis of testing activities is the coverage analysis, which essentially consists of identifying the percentage of elements required by a certain test criterion that was exercised by the set of test cases used. Using this information, the set of test cases can be improved by adding new test cases to practice elements not yet covered. Under this perspective, the knowledge about the theoretical restrictions inherent to the testing activity is essential, since the required elements may not be executable and, in general, the testers involved in determining the non-executability of a given test requirement involve participation (ZALLAR, 2001).

As already mentioned, functional, structural and error-based testing techniques are used for performing and assessing the quality of testing activities. Such techniques differ in the origin of the information used in the analysis and construction of sets of test cases. This text presents the last two techniques in more detail, more specifically, the criteria for potential uses, the mutant analysis criterion and the interface mutation criterion, as well as the support tools PokeTool, Proteum and PROTEUM=IM. These criteria illustrate the main aspects relevant to software coverage testing. For a more comprehensive view, an overview of the functional technique and the best-known criteria of this technique is presented herein (ZALLAR, 2001).

Functional testing is also known as “black-box testing” because it treats software as a box whose contents are unknown, and of which only the outside, that is, the input data provided, and the responses generated as output can be seen. In the functional test technique, system functions are verified without worrying about implementation details (CENPRA, 2001).

Functional testing consists of two main steps: identification of the functions that the software should perform, and creation of test cases that can be used for checking if the software performs these functions. The functions that a software must have result from its specifications. Therefore, a well-designed specification according to user requirements is essential for this type of test (CENPRA, 2001).

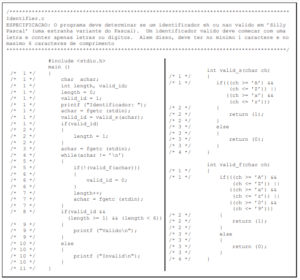

Figure 1 – Example program

Subtitle: SPECIFICATION: The program must determine whether or not an identifier is valid in ‘Silly Pascal’ (a strange variant of Pascal). A valid identifier must start with a letter and contain only letters or digits. In addition, it must have a minimum of 1 character and a maximum of 6 characters of length.

Source: CENPRA, 2001.

One of the problems of functional criteria is that the program specification is usually done in a descriptive and non-formal way. Therefore, testing requirements derived from such specifications are also somewhat imprecise and informal. Consequently, it is difficult to automate the application of such criteria, which are generally limited to manual application. On the other hand, for the application of these criteria, it is essential to identify the inputs, the function to be calculated and the output of the program, which are in practically all test phases (unit, integration and system) (CENPRA, 2001).

3. FINAL CONSIDERATIONS

This text presents some software testing criteria and concepts, focusing on those that are considered most promising in the short- and medium-term: the data flow-based criterion, the mutant analysis criterion and the interface mutation criterion. The testing tools PokeTool, Proteum and PROTEUM=IM were also presented, as well as several other initiatives and efforts to automate these criteria, given the importance of this aspect for the quality and productivity of the testing activities themselves.

It should be noted that the concepts and mechanisms developed herein are applicable in the context of the object-oriented software development paradigm, with the necessary adaptations. First, the application of these criteria will be examined when testing C and Java programs. In both, intra-method and inter-method testing, the application of mutant analysis or interface mutation criteria is practically direct. Later on, when considering other interactions in addition to the specific features of object-oriented language, such as dynamic coupling, inheritance, polymorphism and encapsulation, it may be necessary to develop new mutation operators to model the typical errors found in this connection.

As mentioned, testing activities play an important role in software quality, both from the point of view of processes and the product. For example, from the point of view of the quality of software development process, systematic testing is an essential activity to advance to level 3 of the SEI CMM model. In addition, the set of information obtained in testing activities is important for debugging activities, reliability estimates and software maintenance.

REFERENCES

BEIZER, B. Black-Box Testing: techniques for funcional testing of software and system. New York. 1995.

HETZEL, B. The Complete Guide to Software Testing. 2nd Edition, John Wiley & Sons. 1988.

MYERS, G. J. The Art of Software Testing. Wiley, New York. 1979.

CRESPO, A. N.; MARTINEZ, M. R.; JINO, M.; ARGOLO, M. T. Application of the IEEE 829 Standard as a Basis for Structuring the Testing Process; The Journal of Software Testing Professionals. 2002.

IEEE COMPUTER SOCIETY. IEEE Std 829: Standard for Software Test Documentation. 1998.

CRAIG, R. D.; JASKIEL, S. P. Systematic Software Testing. Artech House Publishers. 2002.

ZALLAR, K. Are You Ready for Test Automation Game? STQE – Software Testing and Quality Engineering Magazine. 2001.

RENATO ARCHER RESEARCH CENTER – CENPRA. Software Process Improvement Division – DMPS; RT – “Guia para Elaboração de Documentos de Teste de Software”. Technical Report. 2001.