In recent years, Artificial Intelligence (AI) has enabled advances in applications that were previously unimaginable. These advances have increased efficiency in daily processes by automating complex tasks and improving the performance of individuals and organizations across diverse fields. One area that stands out is Natural Language Processing (NLP), which relies on Large Language Models (LLMs) to build solutions, products, and applications. These models can extract information, answer questions, translate text, and perform other tasks, depending on the business problem.

Applications built on LLMs often require specific context to produce personalized and precise responses. This context is usually found in private documents belonging to individuals or organizations. For example, for a chatbot to help users (e.g., employees, customers, or partners) with specific, internal product-related questions, it must be given additional information about the product’s specifics. In this scenario, a well-known technique called Retrieval-Augmented Generation (RAG) supplies knowledge that was not present in the model’s training data.

RAG augments LLM text generation with information retrieval: relevant passages are retrieved from a knowledge source and added to the prompt, so the model can generate responses grounded in a specific context. RAG has several components that can be adapted and modified depending on the complexity of the solution being developed.
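To make the retrieve-then-augment idea concrete, here is a minimal, dependency-free sketch. It assumes a simple bag-of-words cosine similarity as a stand-in for the dense embedding models and vector stores that production RAG systems typically use; the function names (`tokenize`, `cosine`, `retrieve`, `augment`) and the sample documents are hypothetical and chosen only for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep only alphanumeric word tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Score every document against the query and return the k most similar ones.
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def augment(query: str, context: list[str]) -> str:
    # Place the retrieved passages in front of the question before it is sent to the LLM.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {query}"


# Hypothetical private documents for a product-support chatbot.
docs = [
    "The X100 router supports firmware updates over USB.",
    "Our office is closed on public holidays.",
    "To reset the X100 router, hold the power button for ten seconds.",
]
top = retrieve("How do I reset the X100 router?", docs, k=1)
print(augment("How do I reset the X100 router?", top))
```

Only the augmented prompt, not the whole document collection, would then be passed to the LLM; swapping `tokenize`/`cosine` for a real embedding model changes the quality of retrieval but not the overall flow.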

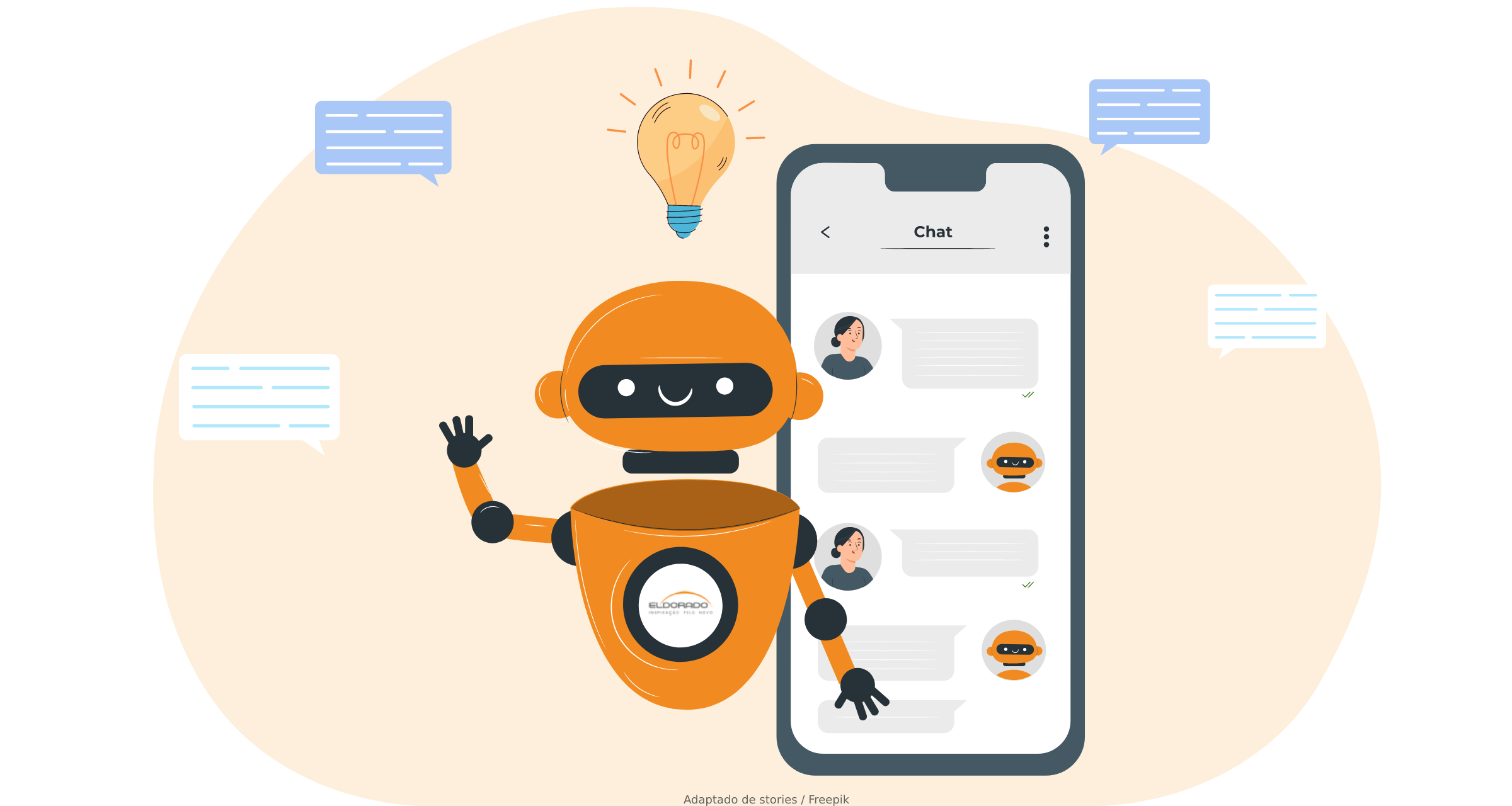

Below is an example of RAG for a chatbot application usingprivate data:

Each step in this diagram can be customized according to the application and the task of interest. For example, we can apply different techniques to extract information from documents depending on their format (plain text, or content that includes images, audio, or tables); use a range of similarity-based mechanisms to identify the most relevant documents; and tune retrieval parameters, such as the size of the text chunks into which documents are split.
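Chunk size is one of the retrieval parameters mentioned above. The sketch below shows a common approach, fixed-size windows with overlap, so that a sentence cut at a chunk boundary still appears whole in at least one neighbouring chunk. It is an assumption-laden illustration: sizes are counted in whitespace-separated words rather than model tokens, and `chunk_text` is a hypothetical helper, not part of any specific library.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    # Split text into windows of `chunk_size` words; consecutive windows share `overlap` words.
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window already reaches the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_text(sample, chunk_size=4, overlap=1):
    print(chunk)
```

Smaller chunks make similarity scores more precise but carry less context each; larger chunks do the opposite, which is why this parameter is usually tuned per application.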

It is also possible to work on other components, such as prompt engineering techniques that improve how the LLM uses the retrieved data, or model selection based on cost, efficiency, and performance needs (both response quality and processing time).
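As one example of prompt engineering for RAG, the hypothetical template below numbers each retrieved passage, tags it with its source, and instructs the model to answer only from those passages and to admit when the answer is missing. The wording of the instructions and the `(source, text)` input format are assumptions for illustration; the best phrasing depends on the model chosen.

```python
def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    # passages: (source_name, text) pairs returned by the retrieval step.
    numbered = "\n".join(
        f"[{i}] ({source}) {text}" for i, (source, text) in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the numbered passages below. "
        "Cite passage numbers like [1]. If the passages do not contain the answer, "
        "say that you do not know.\n\n"
        f"Passages:\n{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )


print(build_prompt(
    "How do I reset the X100 router?",
    [("manual.pdf", "To reset the X100 router, hold the power button for ten seconds.")],
))
```

Numbering the passages gives the model a simple way to cite them, and the explicit "say that you do not know" instruction is a common way to discourage answers that are not supported by the retrieved context.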

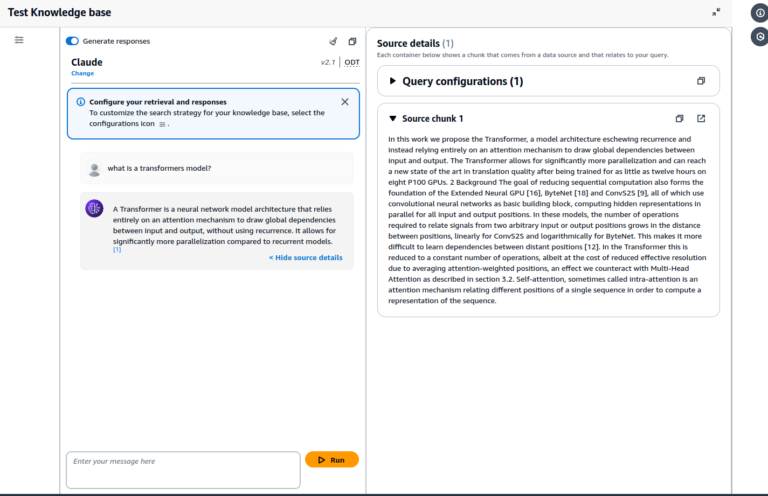

ELDORADO’s AI specialists explore all these possibilities to offer solutions with the most suitable tools and techniques for each client’s needs. One of the solutions we offer, designed for privacy, flexibility, and agile development, uses the Amazon Bedrock service. Bedrock offers a variety of features that help developers build conversational AI applications using RAG in a highly customizable way.

Some benefits of using Bedrock for developing solutions are:

- Secure connection between models and data sources: Provides a secure way to connect your LLMs to your data sources, helping ensure that your data is protected.

- Easy retrieval of relevant data for prompt augmentation: Retrieves relevant data from private sources so it can be easily added to prompts. This can improve the quality and relevance of a model’s responses.

- Source attribution: Provides source attribution for the retrieved data, so you know where the information used in each response comes from.

- Integration with AWS services: Integrates easily with other AWS services, such as Amazon S3 buckets, Amazon Lex, and containers, among others.
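The retrieval, prompt augmentation, and source attribution features above can be exercised through the `retrieve_and_generate` operation of the `bedrock-agent-runtime` client in the AWS SDK for Python (boto3). The sketch below is a hedged illustration, not a reference implementation: it assumes an existing Bedrock Knowledge Base (for example, one backed by documents in an S3 bucket), and the knowledge base ID, model ARN, and region are placeholders you would replace. The helper names are hypothetical; the request is built and the response parsed in plain functions so they can be checked without AWS access.

```python
from typing import Any


def build_rag_request(question: str, knowledge_base_id: str, model_arn: str) -> dict[str, Any]:
    # Keyword arguments for bedrock-agent-runtime's retrieve_and_generate call.
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def extract_answer_and_sources(response: dict[str, Any]) -> tuple[str, list[str]]:
    # Return the generated text plus the unique S3 URIs of the chunks cited in it.
    answer = response["output"]["text"]
    uris: list[str] = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            uri = ref.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in uris:
                uris.append(uri)
    return answer, uris


def ask(question: str, knowledge_base_id: str, model_arn: str, region: str = "us-east-1"):
    # Requires boto3, valid AWS credentials, and an existing Knowledge Base.
    import boto3

    client = boto3.client("bedrock-agent-runtime", region_name=region)
    response = client.retrieve_and_generate(
        **build_rag_request(question, knowledge_base_id, model_arn)
    )
    return extract_answer_and_sources(response)
```

Returning the cited S3 URIs alongside the answer is what lets a chatbot show users which documents a response was based on.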

Additionally, Amazon Bedrock can be used directly from the AWS console for quick end-to-end implementations. Alternatively, more adaptable solutions can be built with Python libraries such as LangChain and LlamaIndex.
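For more adaptable pipelines, retrieval can be decoupled from generation so that your own prompt template, model, or framework handles the rest. LangChain and LlamaIndex provide their own Bedrock integrations; the sketch below instead uses the `retrieve` operation of boto3’s `bedrock-agent-runtime` client directly, under the same assumptions as any Bedrock call (an existing Knowledge Base, AWS credentials, and a placeholder ID and region). The helper names and the `min_score` filter are hypothetical additions for illustration.

```python
from typing import Any


def build_retrieve_request(query: str, knowledge_base_id: str, top_k: int = 5) -> dict[str, Any]:
    # Keyword arguments for bedrock-agent-runtime's retrieve call (retrieval only, no generation).
    return {
        "knowledgeBaseId": knowledge_base_id,
        "retrievalQuery": {"text": query},
        "retrievalConfiguration": {"vectorSearchConfiguration": {"numberOfResults": top_k}},
    }


def to_passages(response: dict[str, Any], min_score: float = 0.0) -> list[tuple[str, str]]:
    # Convert retrieval results into (source_uri, text) pairs, dropping low-scoring chunks.
    passages = []
    for result in response.get("retrievalResults", []):
        if result.get("score", 0.0) < min_score:
            continue
        source = result.get("location", {}).get("s3Location", {}).get("uri", "unknown")
        passages.append((source, result["content"]["text"]))
    return passages


def retrieve_passages(query: str, knowledge_base_id: str, top_k: int = 5, region: str = "us-east-1"):
    # Requires boto3, valid AWS credentials, and an existing Knowledge Base.
    import boto3

    client = boto3.client("bedrock-agent-runtime", region_name=region)
    response = client.retrieve(**build_retrieve_request(query, knowledge_base_id, top_k))
    return to_passages(response)
```

The resulting `(source, text)` pairs can be inserted into any custom prompt and sent to whichever model best fits the cost and latency requirements.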

Bedrock can be easily adapted to specific cases (such as use in other languages, creation of agents, multimodal retrieval, etc.) thanks to its wide range of available resources and straightforward customization.

At ELDORADO, we already use solutions built with Amazon Bedrock to accelerate business productivity. We are therefore well positioned to apply these techniques, together with other AWS services, to help you meet your business needs with Generative AI securely, privately, and efficiently.

Figure: Illustration of a Chatbot operating with Amazon Bedrock and using AI-related articles as private information.